Giving Microsoft's AI Experimentation Platform a makeover

Project Overview

I redesigned ZebraAI (Z.AI), Microsoft's AI experimentation platform, to make prompt engineering accessible to 17,000 support engineers worldwide, not just AI experts.

Responsibility

Redesigned ZebraAI's core workflows, simplifying navigation (11→5 tabs), experiment creation (8→5 steps), and search to improve usability across 17,000 users.

Role & Team:

Lead UX Designer collaborating with 1 researcher, 1 PM, 2 engineers, and Microsoft advisors including a Principal Engineer, Data Science Manager, and UX Design Lead.

Our Impact on Z.AI

20 → 5 min

decrease in experiment creation time

74% reduction

in website drop-offs

50% increase

in user satisfaction score

The Problem

Z.AI is…

An internal Microsoft tool that lets engineers experiment with prompt engineering using secure customer support data and Azure OpenAI.

Z.AI is also…

difficult to use. Navigation was unclear, search failed, and the 8-step creation process was so confusing that users just copied existing experiments instead.

Why does Z.AI matter?

What's at stake

Without a usable interface, Z.AI's potential goes unrealized. Poor adoption prevents Microsoft from scaling AI experimentation across 17,000 support engineers, teams build redundant experiments in isolation, and manual compliance reviews create security risks. Microsoft also risks falling behind competitors that make AI tools more accessible.

The opportunity

Fixing ZebraAI's UX unlocks three key benefits:

Enhance Support Efficiency

Streamlined workflows mean faster experiment creation and quicker problem resolution.

Empower Support Teams

Accessible design opens AI tools to all support engineers, not just technical experts.

Enable AI at Scale

Making the platform usable for 17,000 engineers strengthens Microsoft's AI leadership.

The current design is outdated

Home page

The old homepage was cluttered with unnecessary tabs and dense text, making it hard for users to find core actions.

AI Chat page

The AI Chat overloaded users with text and technical terms like “temperature” and “top-p value.”

Create page

The experiment creation flow had eight steps and no clear guidance, overwhelming new users.

Help page

The FAQ page was text-heavy and overwhelming, burying critical guidance in long paragraphs.

User Research

Who we talked to (Personas)

Our research revealed two distinct user groups that shaped the design challenge:

→ Creators pushed the limits of Z.AI but struggled with its steep learning curve, often losing time to trial and error.

→ Consumers valued efficiency and quick wins but were frustrated when the platform prioritized flexibility over clarity.

The UX of a survey

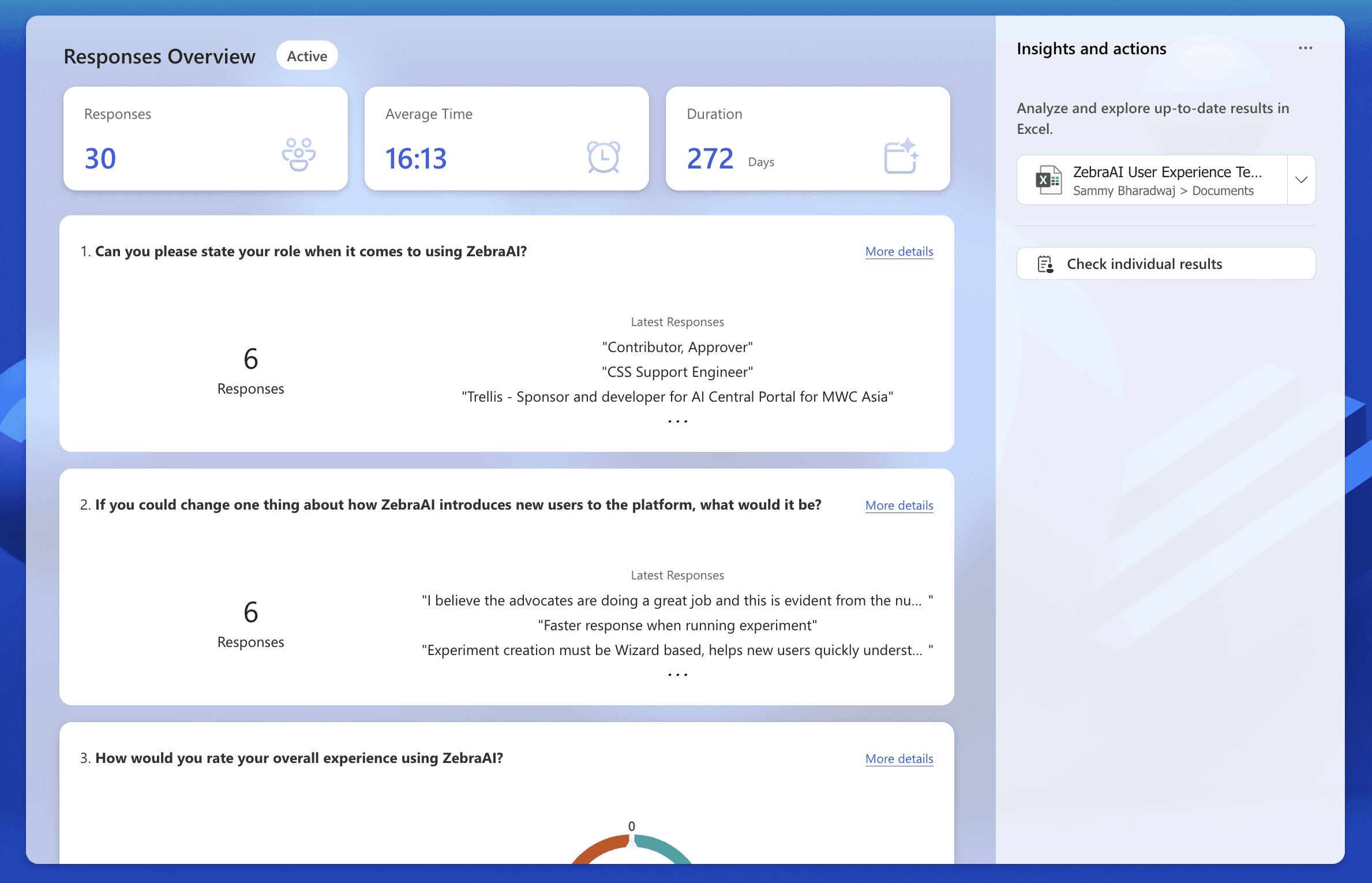

I collaborated with my UX manager to design a survey exploring user behaviors and pain points. Through multiple rounds of refinement, we focused on asking fewer, higher-impact questions to maximize response rates and data quality.

Key findings:

60% of respondents manually verify AI outputs, a sign that users don't trust the platform's results

56% of respondents needed 4+ experiments before mastering the creation process

The platform needs both transparency improvements (to build trust) and workflow simplification (to shorten the learning curve)

Actually talking to users

What we discovered: One platform, two completely different user needs

"The UI is the biggest pain point. Productivity is lost due to poor search functionality and lack of documentation."

"I don't want to build experiments. I just want to find one that solves my problem."

"This looks fine, but I have no idea what 'temperature value' means. Should I change it?"

Consumer seeking quick solutions

Creator struggling with platform complexity

Former user who abandoned the platform

Why did we lose our users?

The platform was designed for technical Creators, but 60% of users were Consumers who needed simplicity, not flexibility. This mismatch drove users away.

Opportunities

I guided my team toward four main areas for redesign

User research revealed four critical barriers. I translated each into a design solution:

🏠 Homepage & Navigation

Simplified navigation from 11 tabs to 5 core actions to reduce decision paralysis

🔬 Experiment Creation

Reduced creation from 8 confusing steps to 5 guided steps with clear progress indicators

🔍 Search & Discovery

Introduced category-based organization and smarter filtering to help users find experiments faster

📚 User Guidance

Added contextual help, tooltips, and onboarding to guide users through complex workflows

Design Process

Translating research into design

I turned our research insights into low-fidelity wireframes, then refined them into a high-fidelity prototype.

Refining through feedback

After creating high-fidelity prototypes, I reviewed the designs with my UX manager and users. Their feedback shaped the final direction:

Experiment creation wasn't intuitive: users couldn't tell what each step was for or whether steps were required.

AI chat felt disconnected: the small text box needed to become an immersive, full-page experience.

Language was too technical: engineering terms like "temperature" confused non-technical users.

Guidance was missing: there was no onboarding or contextual help to orient new users.

Accelerating exploration with AI

As the designer, I wanted to support my team while using new AI tools to prototype some of the designs. I used Claude for research and analysis, Figma Make for ideation, and Cursor for implementation.

Claude

Synthesized user research findings and identified patterns across interview data to prioritize design improvements.

Cursor

Generated HTML/CSS layout mockups through MCP (Model Context Protocol) to rapidly test different navigation and component approaches.

Figma Make

Created UI component variations to explore visual styles, spacing, and layout options quickly.

Final Designs

A more welcoming home page

I reduced the sidebar to five tabs, added a global search, and surfaced the three most important CTAs (Explore, Create, Chat). I also added a Social Hub for community updates.

Seamless experiment creation

The redesign cut the flow to five steps in a wizard UI, added guidance text and tutorial videos, and introduced progress tracking.

An immersive AI chat experience

I simplified the layout, moved the chat input to the bottom for immersion, and added tooltips to explain advanced settings.

Learning resources, made easily accessible

I transformed the old Help page into a new Learn page with clear sections for tutorials, FAQs, community discussions, and office hour recordings, using visuals and structured layouts instead of walls of text.

Capstone showcase

For the Informatics Capstone showcase, we set up a display with a poster, prototype walkthrough video, and a video prototype. I designed the poster layout, filmed the walkthrough, helped create the prototype video, and presented the features I led along with the ideation phase. Our design received positive feedback, and we had great conversations with judges and guests throughout the event.
